Designing the Infrastructure for Responsible AI

Customer: Daiki

Duration: Ongoing

Role: Co-Founder

Context: Freelance

Language: English

Objective

AI is transforming every industry – yet most teams still lack the tools, processes, and clarity to develop AI systems that are not only innovative, but also legally compliant, socially responsible, and ethically sound.

At Daiki, our goal was to design a platform that operationalizes AI governance: making accountability and transparency tangible, actionable, and integrated into daily workflows. We set out to answer critical questions:

- How might AI teams develop responsibly without slowing down?

- How might regulation become a product feature rather than a roadblock?

- What does “compliance by design” look like in the age of generative AI?

Learning Path

As Strategic Designer and Co-Founder, I led the product discovery and design process from the ground up, shaping both vision and execution:

- Strategic Positioning: Helping define Daiki’s role in a rapidly emerging market – not just as a compliance tool, but as a trust infrastructure for AI teams

- Qualitative Research: Conducting deep-dive interviews with AI engineers, compliance officers, data scientists, and founders to surface unmet needs, blockers, and mental models

- Product Design & UX: Synthesizing research insights into information architecture, wireframes, and flows – and iterating closely with users and our tech team

- Brand & Messaging: Translating regulatory complexity into clear, confident narratives that resonate with practitioners

The process was rooted in Design Thinking, with fast loops of insight, ideation, prototyping, and testing – always in close alignment with regulatory foresight and technical feasibility.

AI governance is both urgent and ambiguous. While the EU AI Act and other frameworks are taking shape, many teams still perceive compliance as an afterthought – or avoid it altogether.

Key challenges included:

- Market Readiness: Helping teams see value in governance before it becomes mandatory

- Perceived Friction: Reframing compliance from “checklist fatigue” to “strategic enabler”

- Complexity Translation: Turning legal requirements into intuitive, actionable UX patterns

- Cross-Functional Buy-In: Designing for teams with wildly different mental models – from engineers to ethics boards

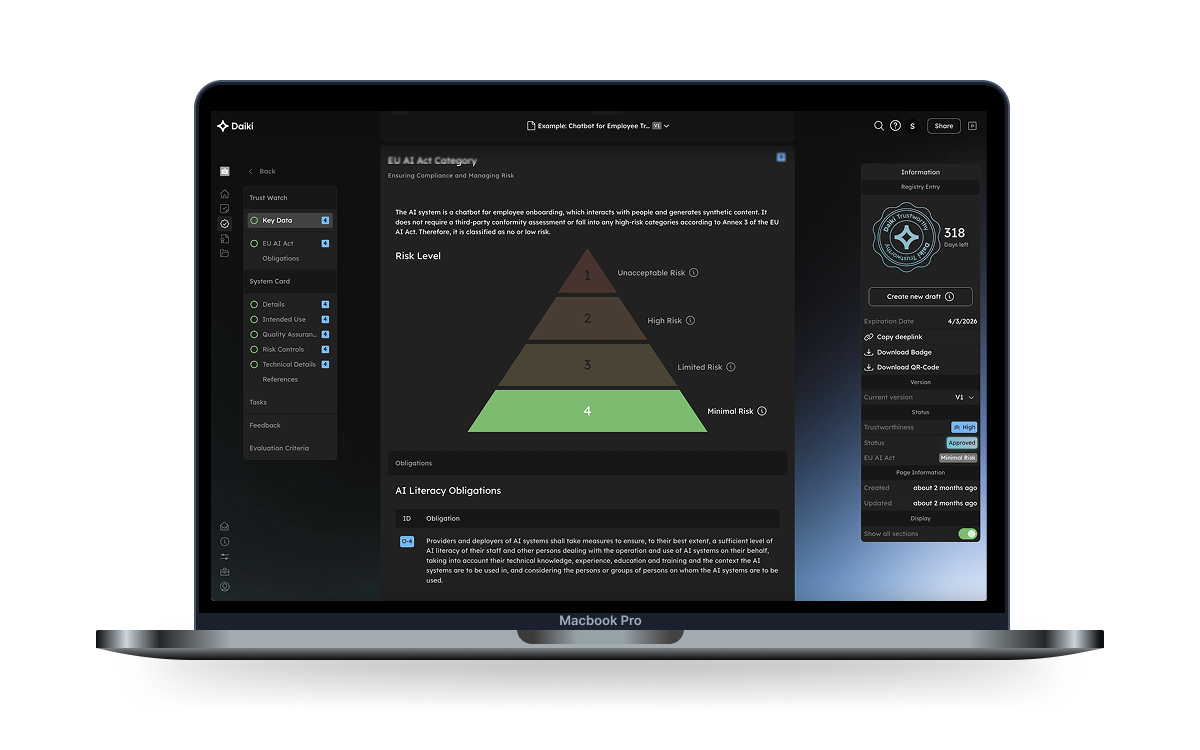

The result is a SaaS platform that empowers teams to integrate responsible AI practices into every stage of the development lifecycle.

The product includes two core modules:

- QMS for AI: A Quality Management System tailored to the unique risks and lifecycles of AI systems – supporting internal alignment, documentation, and audit-readiness

- AI Registry: A structured way to register, track, and evaluate AI models – enabling transparency, versioning, and “trustworthiness tagging” across an organization

By embedding regulatory thinking directly into the product, Daiki enables teams to build with confidence, navigate compliance proactively, and ultimately increase the credibility and societal acceptance of their AI solutions.

“Daiki doesn’t just help teams meet requirements – it helps them raise the standard of what good AI looks like.”